Editor's note: Jay Shah, a deep learning developer at Statsbot, takes you on a journey through neural networks to explore popular types such as autoencoders, convolutional neural networks (CNNs), and recurrent neural networks (RNNs).

Today, neural networks are widely used in various business applications, including sales forecasting, customer research, data validation, and risk management. At Statsbot, we leverage these powerful models for time series prediction, anomaly detection, and natural language understanding.

In this article, we will introduce what neural networks are, discuss the main challenges beginners face when working with them, and explain the most popular types of neural networks and their real-world applications. We’ll also explore how they can be applied across different industries and sectors.

Understanding Neural Networks

The term "neural network" has recently gained a lot of attention in the field of computer science, sparking interest among many people. But what exactly is a neural network, how does it work, and is it really useful?

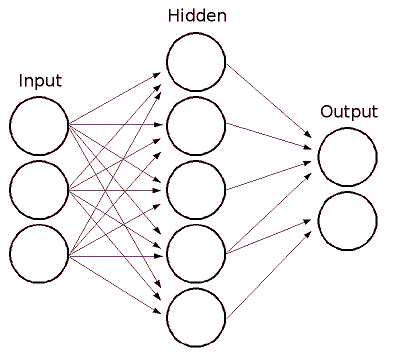

At its core, a neural network consists of layers of interconnected computational units called neurons. The network transforms the input data layer by layer until it produces an output, such as a classification. Each neuron multiplies each of its inputs by a weight, sums the results, adds a bias, and then applies an activation function to the sum.
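The computation inside a single neuron can be sketched in a few lines. This is a minimal illustration, assuming a sigmoid activation; the function names and the input, weight, and bias values are made up for the example and do not come from any particular library.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron_output(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1)
print(round(output, 4))
```

A full layer is just many such neurons sharing the same inputs, each with its own weights and bias.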

The Learning Process

One of the key features of neural networks is their iterative learning process. During training, the network processes the data samples one at a time, adjusting the weights associated with the inputs after each one. This continues until all samples have been processed, and the network's predictions improve over time.

Neural networks offer several advantages, such as robustness to noisy data and the ability to classify patterns they were not explicitly trained on. The backpropagation algorithm is one of the most widely used methods for training these networks.

Once the network architecture is defined for a specific application, the training process begins. Initially, the weights are assigned randomly. Then, the network processes the training set, compares the actual output with the expected one, and adjusts the weights accordingly through error propagation.

This cycle repeats as the network learns from the data, gradually improving its accuracy. As the weights are refined, the same dataset may be processed multiple times during training.
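The training cycle described above can be sketched for the simplest possible case: a single sigmoid neuron learning the logical OR function. The dataset, learning rate, number of passes, and squared-error loss are all assumptions made for this illustration. With only one layer, the "error propagation" step reduces to a single gradient update.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(inputs, weights, bias):
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)

def train(samples, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    # Start from small random weights.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(len(samples[0][0]))]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(epochs):              # the same dataset is processed many times
        for inputs, target in samples:   # one sample at a time
            predicted = predict(inputs, weights, bias)
            error = predicted - target   # compare actual and expected output
            # Gradient of the squared error 0.5 * error**2 with respect to the weighted sum.
            delta = error * predicted * (1.0 - predicted)
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias

OR_SAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, target, round(predict(inputs, weights, bias), 2))
```

In a multi-layer network the same idea applies, except that the error signal is passed backward through each layer in turn to work out how every weight should change.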

What Makes It Hard?

One of the biggest challenges for beginners is understanding what happens at each layer of the network. After training, each layer extracts higher-level features from the input data, with the final layer determining the classification. But how exactly does this happen?

Instead of specifying every detail, we let the network make decisions. For example, we can pass an image to the network and ask it to analyze it. Then, we can choose a specific layer and ask the network to enhance the features it detects at that level. Since each layer handles different levels of abstraction, the complexity of the features depends on which layer we focus on.

Popular Neural Network Types and Applications

In this beginner’s guide to neural networks, we’ll cover autoencoders, convolutional neural networks (CNNs), and recurrent neural networks (RNNs).

Autoencoders

Autoencoders grew out of the idea that random initialization isn’t ideal: pre-training each layer with unsupervised learning can lead to better initial weights. Deep Belief Networks are one example of this approach. More recent work, such as variational autoencoders built on probabilistic methods, has revived interest in the area.

Autoencoders are rarely used in practical applications today, because techniques like batch normalization make it possible to train very deep models from scratch. Still, with proper constraints, autoencoders can learn more meaningful representations than traditional methods like PCA.

Let’s look at two practical applications of autoencoders:

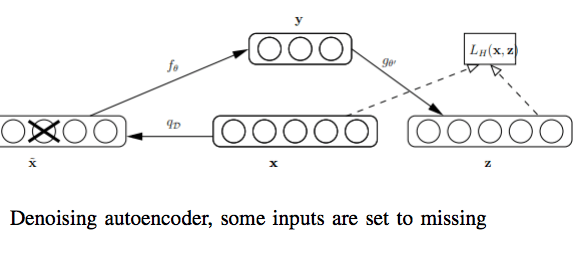

A denoising autoencoder with convolutional layers can efficiently remove noise from images.

Denoising Autoencoder – Some Inputs Are Set to Be Missing

In the figure above, the random destruction process sets some inputs to zero, forcing the denoising autoencoder to predict the missing values. This helps the model learn to reconstruct the original input from corrupted data.
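The random destruction step is simple to sketch. The snippet below is only an illustration of the corruption process, not a full autoencoder; the function name, the drop probability, and the sample vector are assumptions made for the example.

```python
import random

def corrupt(inputs, drop_probability=0.3, seed=None):
    """Return a copy of the inputs with a random subset set to zero."""
    rng = random.Random(seed)
    return [0.0 if rng.random() < drop_probability else x for x in inputs]

# Hypothetical input vector, for example normalized pixel intensities.
clean = [0.9, 0.1, 0.8, 0.4, 0.7, 0.2]
noisy = corrupt(clean, drop_probability=0.3, seed=42)

# A denoising autoencoder is trained on pairs like this one:
# the corrupted vector is the input, the clean vector is the target.
training_pair = (noisy, clean)
print(training_pair)
```

Because the target is always the uncorrupted vector, the model cannot simply copy its input and has to learn how the values relate to one another.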

Data visualization often relies on dimensionality reduction techniques such as PCA and t-SNE. Used alongside neural network training, both can help improve model accuracy by reducing the complexity of the input space. However, the performance of multilayer perceptron (MLP) models depends heavily on the network structure, data preprocessing, and the nature of the problem being solved.

Convolutional Neural Networks (CNNs)

CNNs take their name from the "convolution" operation, which is used to extract features from images. By applying filters to small regions of the input, CNNs preserve spatial relationships and learn hierarchical feature representations.
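To make the idea of applying a filter to small regions concrete, here is a minimal pure-Python sketch. It slides a 2x2 filter over a tiny image without padding and without flipping the filter (the cross-correlation form that deep learning libraries usually call convolution). The image and the hand-written edge filter are illustrative assumptions; in a real CNN the filter values are learned.

```python
def convolve2d(image, kernel):
    """Slide the kernel over every position where it fits entirely inside the image."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output

# A 4x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A filter that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the edge sits, which shows how the output keeps the spatial layout of the input.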

Some successful applications of CNNs include:

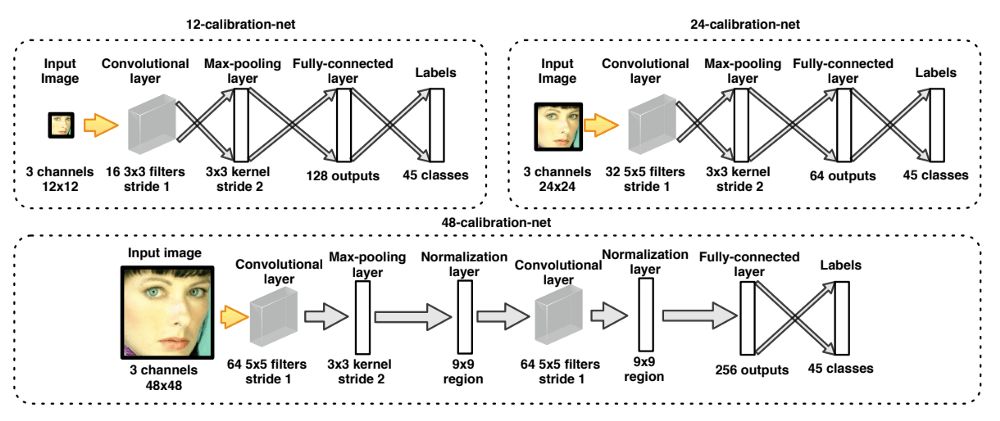

A study by the Stevens Institute of Technology and Adobe used a cascade of CNNs for fast face detection. The detector first evaluates the image at low resolution to identify potential face areas, then processes those regions at higher resolution for accurate detection.

The cascade structure also includes a calibration network to speed up detection and improve bounding box quality.
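The control flow of a cascade can be sketched independently of the networks themselves. In the hypothetical example below, the two scoring functions stand in for a cheap low-resolution network and an expensive high-resolution one. The candidate regions, scores, and thresholds are invented for illustration and are not taken from the study.

```python
def cascade_detect(candidates, coarse_score, fine_score,
                   coarse_threshold=0.2, fine_threshold=0.8):
    """Run the expensive stage only on candidates that survive the cheap stage."""
    detections = []
    fine_calls = 0
    for candidate in candidates:
        if coarse_score(candidate) < coarse_threshold:
            continue                      # rejected early at low cost
        fine_calls += 1
        if fine_score(candidate) >= fine_threshold:
            detections.append(candidate)
    return detections, fine_calls

# Stand-in candidates: (label, coarse score, fine score). All values are made up.
candidates = [
    ("sky", 0.05, 0.10),
    ("tree", 0.30, 0.20),
    ("face", 0.60, 0.95),
    ("wall", 0.10, 0.05),
]
detections, fine_calls = cascade_detect(
    candidates,
    coarse_score=lambda c: c[1],  # placeholder for the low-resolution network
    fine_score=lambda c: c[2],    # placeholder for the high-resolution network
)
print(detections, fine_calls)  # [('face', 0.6, 0.95)] 2
```

Only two of the four candidates reach the expensive stage, which is where the speed-up of a cascade comes from.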

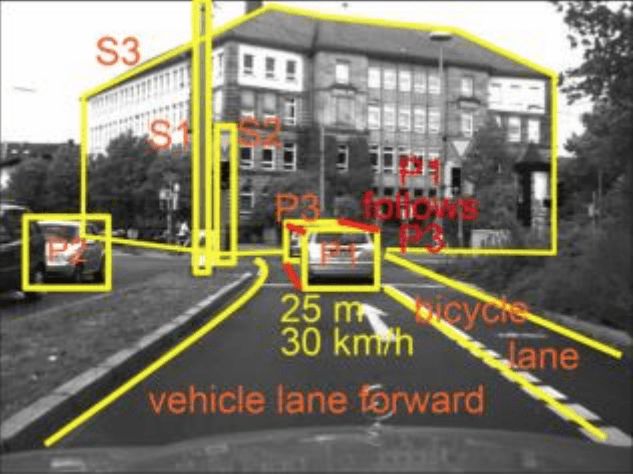

In autonomous driving projects, CNNs are used to estimate depth, which helps ensure the safety of passengers and other vehicles. For example, NVIDIA uses CNNs in self-driving car projects.

CNNs are highly flexible because each layer is built from many learnable parameters. One subtype is the convolutional deep belief network. Traditionally, CNNs are used for image analysis and object recognition.

By the way, there's an interesting project where CNNs are used to drive a car in a game simulator and predict the direction of movement.

Recurrent Neural Networks (RNNs)

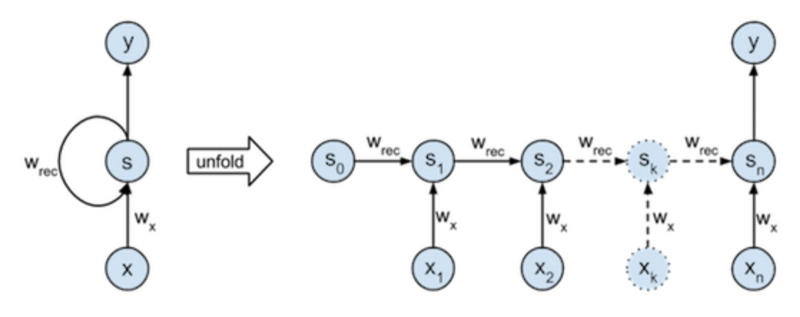

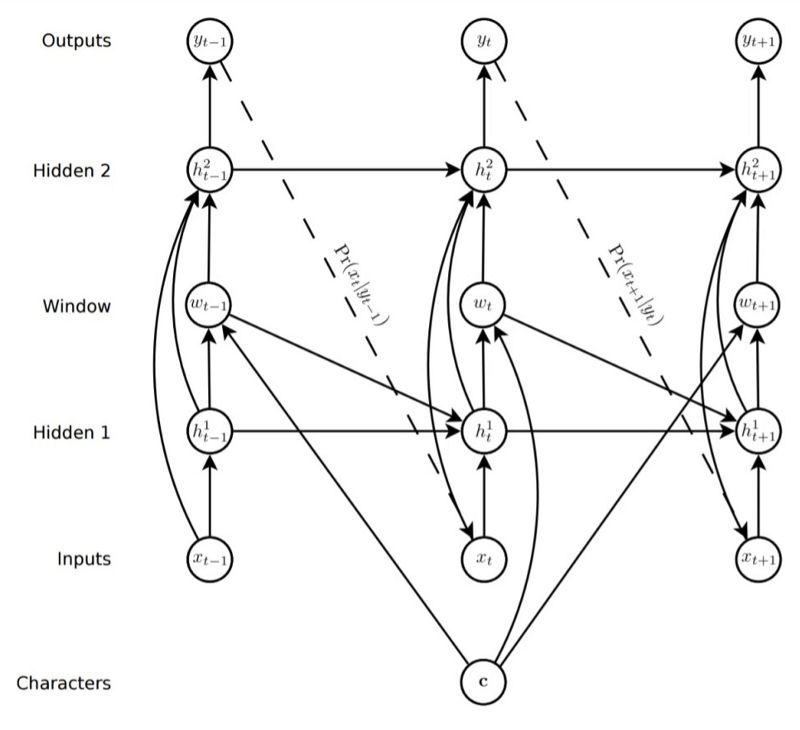

RNNs can be trained for sequence generation by processing a sequence one step at a time and predicting the next step.

Assuming the prediction is probabilistic, the network iteratively samples from the output distribution and passes the sample to the next step as input. In this way, the network generates new sequences, essentially "dreaming" like a human.
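The sample-and-feed-back loop can be sketched with a toy recurrent network. The model below uses untrained random weights and a three-letter vocabulary, so its output is meaningless; it only illustrates how each sampled step becomes the next input. All names, sizes, and the choice of a plain tanh recurrent cell are assumptions made for this example.

```python
import math
import random

VOCAB = ["a", "b", "c"]
HIDDEN = 4

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_weights(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def rnn_step(token_index, hidden, params):
    """One recurrent step: update the hidden state, return next-token probabilities."""
    w_in, w_hidden, w_out = params
    new_hidden = [
        math.tanh(w_in[i][token_index]
                  + sum(w_hidden[i][j] * hidden[j] for j in range(HIDDEN)))
        for i in range(HIDDEN)
    ]
    scores = [sum(w_out[k][i] * new_hidden[i] for i in range(HIDDEN))
              for k in range(len(VOCAB))]
    return softmax(scores), new_hidden

def generate(length, seed=0):
    rng = random.Random(seed)
    # Untrained random weights: a real model would learn these from data.
    params = (make_weights(HIDDEN, len(VOCAB), rng),
              make_weights(HIDDEN, HIDDEN, rng),
              make_weights(len(VOCAB), HIDDEN, rng))
    hidden = [0.0] * HIDDEN
    token = 0  # start from the first vocabulary entry
    sequence = []
    for _ in range(length):
        probabilities, hidden = rnn_step(token, hidden, params)
        # Sample from the output distribution instead of taking the most likely token.
        token = rng.choices(range(len(VOCAB)), weights=probabilities)[0]
        sequence.append(VOCAB[token])  # the sample is fed back in on the next step
    return "".join(sequence)

print(generate(10))
```

Sampling rather than always picking the most likely token is what lets the same network produce a different sequence on each run.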

RNNs can also be used for language-driven image generation, such as generating handwriting from text input. To handle this, a soft window over the text string is passed as additional input to the prediction network. The window parameters are output at the same time as the predictions, allowing the network to dynamically align the text with the pen position. In short, the network learns to decide which character to write next.

Given an input, a trained neural network can generate the expected output. If the network accurately models a known sequence, it can be used to predict future results. Stock market forecasting is a clear example of this application.

Applications in Different Industries

Neural networks are widely used in real-world business problems, such as sales forecasting, customer research, data validation, and risk management.

Marketing

Precision marketing involves market segmentation, dividing the market into different customer groups based on behavior. Neural networks can segment customers based on characteristics like demographics, income, location, purchase habits, and product attitudes.

Unsupervised neural networks can automatically group similar customer attributes, while supervised ones can learn the boundaries between segments by training on the same customer data.

Sales

Neural networks can consider multiple variables simultaneously, such as market demand, customer income, population, and product prices. For supermarkets, sales forecasting is particularly useful.

For example, if a customer buys a printer, they may return within a few months to buy a new cartridge. Retailers can use this information to reach out to customers before they switch to competitors, increasing the likelihood of repeat purchases.

Banking and Finance

Neural networks have been successfully applied in financial fields such as derivative pricing, future price forecasting, exchange rate prediction, and stock performance analysis. While traditional systems relied on statistical techniques, modern finance increasingly uses neural networks as the foundation for decision-making.

Healthcare

Research in medical applications of neural networks is growing rapidly. Experts believe that in the coming years, neural networks will be widely used in biomedical systems. Currently, most studies involve modeling parts of the human body and identifying diseases through imaging scans.

Conclusion

Perhaps neural networks can help us understand some of the “simple questions” of cognition: how the brain processes environmental stimuli and integrates information. But the bigger question remains: why and how do humans experience life, and can machines ever achieve true self-awareness?

This makes us think about whether neural networks could assist artists in creating new visual concepts or even inspire us to reflect on the roots of our creative process.

In short, neural networks make computers more human-like, enhancing their usefulness. So the next time you wish your brain were as reliable as a computer, remember that there’s already a powerful neural network in your head, and be grateful for it!

I hope this beginner’s introduction to neural networks helps you start your first project in this exciting field.
