Cars have become an indispensable part of modern society, providing a low-cost means of transporting goods and people around the world. Unfortunately, driving also causes a large number of accidents and casualties every day. According to the World Health Organization, traffic accidents killed as many as 1.2 million people worldwide in 1998. In 1990, traffic accidents were the ninth leading cause of death worldwide, and they are expected to rise to the third leading cause by 2020.

For these reasons, automotive original equipment manufacturers (OEMs) and their supplier partners, as well as government agencies around the world, have been working to develop and promote active safety and advanced driver assistance systems (ADAS), which are designed to reduce accident rates and the severity of collisions. Automotive industry analysts believe that by 2010 ADAS will become a leading new technology that not only helps drivers recognize potential hazards ahead of time but also, through technologies such as lane departure warning systems (LDWS), drowsiness detection and night vision systems, potentially extends the driver's reaction time.

As consumers become more aware of ADAS and learn that it can provide greater safety, they are expected to be more receptive to the technology, which will drive market growth. ADAS is already used in luxury cars. As the technology matures, it will gradually enter the mass market and be applied to ordinary vehicles, and the increase in volume will significantly reduce product costs. For automotive OEMs, active safety and ADAS technologies will also help differentiate their products, given that passive safety systems are rapidly becoming standard equipment.

ADAS overview

ADAS is designed not to control the vehicle but to give the driver information about the vehicle's surroundings and operating condition and to warn the driver of potential danger, thereby improving driving safety.

ADAS applications use a variety of sensors to collect physical data about the vehicle and its surroundings. After collecting the relevant data, the ADAS system uses processing techniques such as object detection, recognition and tracking to assess hazards. Two applications illustrate this: the lane departure warning system, which alerts the driver immediately when it detects an unintentional lane departure, and traffic sign recognition. When the lane departure warning system is active, it detects and tracks the lane markings relative to the vehicle position and notifies the driver when the vehicle crosses into the adjacent lane. With traffic sign recognition, the system identifies traffic signs and tells the driver the current speed limit, or informs the driver about the particular road section currently being traveled.

Different systems typically require different types of sensors to collect environmental information. For example, the lane departure warning system uses a CMOS camera sensor, the night vision system uses an infrared sensor, the adaptive cruise control (ACC) system usually uses radar, and the parking assist system uses ultrasonic sensors. Although the technical details vary from one application to another, processing usually comprises three stages: data acquisition, pre-processing and post-processing. The pre-processing stage operates on the full image; it is data-intensive and relatively regular in structure, and includes image transformation, stabilization, feature enhancement, noise reduction, color conversion and motion analysis. The post-processing stage performs feature tracking, scene interpretation, system control and decision making (see Figure 1). Whatever its type, a sensor that collects environmental information produces a data set from which a base image is generated.

Figure 1: The entire process of the active safety system

Identifying, tracking and evaluating driving-related objects is a complex task. Driving style and conditions affect the quality of the raw data collected by the sensors and can obscure the details needed to identify and track objects. The driving environment is highly dynamic and unpredictable, and it varies with weather conditions such as strong sunlight, rain, fog and snow. Even in the most complex situations, all data must be processed in real time, with a processing delay of no more than 30 ms. A delay of half a second would keep the driver from responding to a warning in time and could well result in an accident.
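
To put the two latency figures above in perspective, the following minimal sketch computes how far a vehicle travels while the system is still processing. The speed of 100 km/h is an assumed example value, not a figure from this article, and the function name is hypothetical.

```python
def distance_travelled_m(speed_kmh: float, delay_s: float) -> float:
    """Distance in metres covered at constant speed during a processing delay."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return speed_ms * delay_s


if __name__ == "__main__":
    speed = 100.0  # assumed example speed in km/h
    for delay in (0.030, 0.5):  # the 30 ms budget and the half-second delay
        metres = distance_travelled_m(speed, delay)
        print(f"{delay * 1000:.0f} ms -> {metres:.2f} m")
```

At the assumed speed, the vehicle covers less than a metre within the 30 ms budget but close to 14 m during a half-second delay.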

Every step from data collection to action requires powerful signal processing, so implementing active safety and ADAS systems promptly and accurately requires high-performance components. Digital signal processors (DSPs) designed and optimized for automotive safety applications, such as the TMS320DM643x DaVinci processors from Texas Instruments, provide the performance OEMs need to bring active safety and ADAS technology to market.

Dynamic flexibility

In addition to high performance, ADAS applications require a flexible architecture to meet multiple functional requirements. For example, traffic signs differ from country to country in language, text, shape and color. The architecture must be flexible enough to reuse technology as much as possible across product lines and to advance the technology at low cost, at a pace that matches emerging market requirements. Innovating in software is the most efficient way to do this. The DaVinci processor uses a software-programmable architecture that gives developers the flexibility to support ever-changing algorithms.

Consider a pre-processing algorithm that ignores driving conditions such as strong sunlight. Some automotive suppliers use a single algorithm, while others use one algorithm during the day and another at night. In practice, a variety of pre-processing and post-processing algorithms is needed to cover the variety of driving conditions. The system must also adapt quickly: for example, when the vehicle enters a tunnel, it must switch instantly from daytime to nighttime driving mode.
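
One way to picture the day/night switching described above is a selector that picks the pre-processing mode from the average brightness of each frame. This is only a sketch under stated assumptions: the two thresholds, the function names and the placeholder brightening step are hypothetical and are not taken from any supplier's algorithm.

```python
from statistics import mean

DAY, NIGHT = "day", "night"


def select_mode(pixels, current_mode, dark_below=60, bright_above=90):
    """Pick a pre-processing mode from the mean 8-bit brightness of a frame.

    Two thresholds (hysteresis) keep the mode from flickering when the
    brightness hovers near a single cut-off. Both values are assumptions.
    """
    level = mean(pixels)
    if level < dark_below:
        return NIGHT
    if level > bright_above:
        return DAY
    return current_mode  # inside the dead band: keep the current mode


def preprocess(pixels, mode):
    """Placeholder pre-processing: brighten night frames, pass day frames through."""
    if mode == NIGHT:
        return [min(255, p * 2) for p in pixels]
    return list(pixels)


if __name__ == "__main__":
    mode = DAY
    for frame in ([200, 210, 190], [30, 40, 35], [70, 80, 75]):
        mode = select_mode(frame, mode)
        print(mode, preprocess(frame, mode))
```

Because the decision is made per frame, the mode changes on the first dark frame, and the dead band between the two thresholds prevents rapid toggling afterwards.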

Different sensors on the vehicle perform different functions (see Figure 2). For example, side-facing sensors perform blind spot detection; forward-facing sensors handle vehicle, lane, traffic sign and pedestrian recognition; and in-vehicle sensors detect whether anyone is in the car, whether the driver is drowsy and what the driver intends to do.

Figure 2: Multiple sensors installed in the vehicle perform various specific tasks

In addition, different sensors handle different types of data. Some traffic sign recognition algorithms rely primarily on the color of the sign, in which case the forward-facing sensor should support a wide range of colors. Grayscale sensors, on the other hand, are more sensitive to changes in brightness, and their spatial resolution is almost twice that of color sensors. Most ADAS functions depend mainly on sensor sensitivity, so a grayscale camera is usually the better choice. It is also important that image sensors for ADAS applications have a high dynamic range, typically more than 8 bits per pixel.

The most effective way to address these problems is to run multiple algorithms on a single DSP. For example, one forward-facing image sensor can provide the video needed for both lane departure warning and traffic sign recognition. Ideally, a single DSP performs pre-processing and multiple recognition tasks, such as lane departure warning and traffic sign recognition, under all driving conditions. This reduces the chip count, which in turn reduces the number of points of failure, improves system reliability and lowers system cost, all of which are critical in automotive applications.

To improve the robustness of ADAS, the active safety subsystems inside the vehicle must also be coordinated. For example, the direction of the driver's attention directly affects the usefulness of traffic sign warnings. A driver who is watching the road normally should notice the sign ahead and slow down, so a warning issued too early does not help and only annoys the driver. Therefore, before issuing a warning, the system must gather information from both the traffic sign recognition system and the in-vehicle driver monitoring system. If the driver monitoring system indicates that the driver is looking at the road, there is no need to warn immediately that a stop sign is ahead.

A robust ADAS system can even assess complex driving situations. For example, if the car is rapidly approaching a stopped or decelerating vehicle, an emergency lane change is likely. In this case, the lane departure warning should be suppressed to avoid distracting the driver during the lane change. Of course, if the blind spot monitoring system detects another vehicle alongside the car, the system should still issue a warning.
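
The coordination rules in the two paragraphs above can be sketched as a small arbitration function that combines the outputs of several subsystems before any warning is raised. The field names, the warning labels and the exact rule set are assumptions made for illustration; a production system would use richer signals and timing.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    """Hypothetical snapshot of subsystem outputs for one decision cycle."""
    sign_ahead: bool = False                # traffic sign recognition
    driver_watching_road: bool = True       # in-vehicle driver monitoring
    lane_departure: bool = False            # lane tracking
    closing_fast_on_obstacle: bool = False  # forward sensor
    vehicle_in_blind_spot: bool = False     # side-facing sensor


def decide_warnings(s: VehicleState) -> set:
    """Return the set of warnings to issue for the given state."""
    warnings = set()
    # Warn about a sign only when the driver does not appear to be watching the road.
    if s.sign_ahead and not s.driver_watching_road:
        warnings.add("sign")
    # Suppress the lane departure warning during a likely evasive lane change.
    if s.lane_departure and not s.closing_fast_on_obstacle:
        warnings.add("lane_departure")
    # A vehicle alongside during a lane change is always worth a warning.
    if s.lane_departure and s.vehicle_in_blind_spot:
        warnings.add("blind_spot")
    return warnings


if __name__ == "__main__":
    evasive = VehicleState(lane_departure=True,
                           closing_fast_on_obstacle=True,
                           vehicle_in_blind_spot=True)
    print(decide_warnings(evasive))
```

Keeping the rules in one place makes it easy to see that the blind spot warning survives even when the lane departure warning is suppressed.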

System-on-chip architecture improves design efficiency

Today's system-on-chip (SoC) architectures further improve design efficiency by integrating all of the peripherals required for a complete video and image processing system on a single chip. With support for a wide range of peripherals, these highly integrated devices connect easily to other parts of the vehicle system. For example, an SoC can provide direct video output for applications such as parking-assist rear-view displays, or connect directly to the vehicle's main control system over buses such as CAN, LIN or FlexRay.

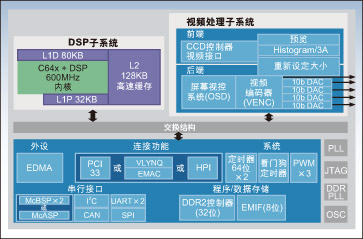

The SoC architecture provides dedicated functionality without the cost of an ASIC. Unlike a fixed-function ASIC, a properly designed SoC retains the flexibility of a software-programmable architecture. For example, TI's DaVinci processor uses a powerful video front end to offload essential but cumbersome pre-processing tasks from the main CPU (see Figure 3). Specifically, the video front end provides a resizer block that resamples the image (scaling it up or down) to the appropriate resolution without consuming DSP cycles. Scaling is necessary because the size of an object in the video frame changes as the car approaches it.

Figure 3: Block diagram of the TMS320DM6437 digital media processor

The DaVinci video front end also provides a histogram block that reports the distribution of pixel intensity in each video frame. The intensity histogram indicates the quality of the captured image. For example, if the image is too dark, the DSP can adjust the contrast to improve processing accuracy. The front end can also perform color space conversion without involving the main CPU. Together, these integrated modules significantly reduce CPU load, allowing developers to integrate more value-added ADAS features on a single DSP.
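
The use of the histogram described above can be sketched in software as follows. On the actual device the histogram comes from the video front end hardware; here it is computed in plain Python purely for illustration. The darkness criterion (80% of pixels below level 64) and the linear contrast stretch are assumptions, not the method used on the processor.

```python
def histogram(pixels, bins=256):
    """Count how many 8-bit pixels fall on each intensity level."""
    counts = [0] * bins
    for p in pixels:
        counts[p] += 1
    return counts


def is_too_dark(hist, dark_level=64, dark_fraction=0.8):
    """True when at least dark_fraction of the pixels lie below dark_level."""
    total = sum(hist)
    if total == 0:
        return False
    return sum(hist[:dark_level]) / total >= dark_fraction


def stretch_contrast(pixels):
    """Linearly stretch intensities so they span the full 0..255 range."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)  # flat image: nothing to stretch
    return [(p - lo) * 255 // (hi - lo) for p in pixels]


if __name__ == "__main__":
    frame = [10, 20, 30, 40]
    if is_too_dark(histogram(frame)):
        frame = stretch_contrast(frame)
    print(frame)
```

The point of the split is that only the cheap decision and the correction run in software, while gathering the statistics is left to dedicated hardware.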

The SoC architecture should be designed to ensure efficient data transfer. As with any video application, the more data is transferred, the longer the processing delay. To improve system performance and make the best use of memory resources, developers should generally limit processing to specific regions of interest, so that the image blocks being processed and evaluated are significantly smaller than the full image. For example, in lane recognition and tracking, the part of the frame that does not show the road surface can be discarded because it contains no relevant data.
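
A region of interest can be sketched as a simple crop of a row-major frame. The frame size and the choice of the lower half as the road region are assumptions for illustration only; the function names are hypothetical.

```python
def crop_roi(frame, top, bottom, left, right):
    """Return rows top..bottom-1 and columns left..right-1 of a row-major frame."""
    return [row[left:right] for row in frame[top:bottom]]


def roi_fraction(frame, roi):
    """Fraction of the full frame's pixels that the region of interest contains."""
    full = sum(len(row) for row in frame)
    part = sum(len(row) for row in roi)
    return part / full


if __name__ == "__main__":
    # Toy 6x8 frame; each pixel value encodes its row and column.
    frame = [[r * 10 + c for c in range(8)] for r in range(6)]
    # Assumption: the road surface occupies the lower half of the frame.
    road = crop_roi(frame, top=3, bottom=6, left=0, right=8)
    print(len(road), "rows kept,", roi_fraction(frame, road), "of the pixels")
```

Halving the number of pixels roughly halves the data that later stages must move and process, which is the motivation given above.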

To support this type of data transfer, SoCs require a multi-channel, multi-threaded direct memory access (DMA) engine. The DMA controller should support multiple transfer geometries and transfer sequences. Previous-generation DMA controllers (such as the EDMA2 controller on earlier TI chips) are limited to two-dimensional transfers, and the source and destination share index parameters. In contrast, the EDMA3 controller on the DaVinci processor supports independent source and destination indexing as well as three-dimensional transfers.
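
The idea of a three-dimensional transfer with independent source and destination indexing can be modeled in a few lines of software. This is a conceptual sketch only: the parameter names are illustrative rather than the controller's register names, all strides are measured from the start of one block to the start of the next, and the real hardware's parameter semantics are not reproduced here.

```python
def dma_copy_3d(src, dst, src_off, dst_off, acnt, bcnt, ccnt,
                src_bidx, dst_bidx, src_cidx, dst_cidx):
    """Copy ccnt frames, each of bcnt arrays, each of acnt contiguous bytes.

    The source and destination use separate array strides (bidx) and
    frame strides (cidx), so a window can be gathered from a large buffer
    and packed densely into a small one.
    """
    for c in range(ccnt):
        for b in range(bcnt):
            s = src_off + c * src_cidx + b * src_bidx
            d = dst_off + c * dst_cidx + b * dst_bidx
            dst[d:d + acnt] = src[s:s + acnt]


if __name__ == "__main__":
    # Two 4x8-byte frames stored back to back; byte value equals its offset.
    src = bytearray(range(64))
    dst = bytearray(16)
    # Gather a 2-row by 4-byte window (rows 1-2, columns 2-5) from each frame.
    dma_copy_3d(src, dst, src_off=1 * 8 + 2, dst_off=0,
                acnt=4, bcnt=2, ccnt=2,
                src_bidx=8, dst_bidx=4,     # source rows are 8 apart, packed rows 4 apart
                src_cidx=32, dst_cidx=8)    # source frames 32 apart, packed frames 8 apart
    print(list(dst))
```

With a shared index, the destination would have to keep the source's row spacing; separate indices let the window land in a compact buffer that the DSP can process without gaps.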

In addition to video input and processing, applications such as parking assistance require video output. Even when the production design has no video output, the capability is very useful during development and system debugging. To support video output, the DaVinci processor includes a video processing back end, which consists of an on-screen display (OSD) engine and a video encoder (VENC). The OSD engine can handle two separate video windows and two separate OSD windows. The VENC offers four analog video outputs and supports digital output up to 24 bits wide in a variety of formats.

Simplified design

ADAS applications are a fast-growing, cutting-edge technology. Developers should use tools that simplify development and speed up prototyping, so ADAS algorithms are best developed in C or with modeling software such as MATLAB and Simulink. A working system, however, requires more than an algorithm. Off-the-shelf software components such as real-time kernels and peripheral drivers are therefore critical, and off-the-shelf dedicated development tools and algorithm libraries are equally important. These components, which are available for DaVinci processors, can cut months from ADAS development time.

Finally, suppliers whose products are intended for automotive applications must qualify them to AEC-Q100, the industry's quality standard for integrated circuits. Qualification is very difficult unless the solution is designed with these requirements in mind from the outset. Suppliers can choose components such as the DM643x DaVinci processor that are designed to meet the AEC-Q100 standard, which helps ensure that they meet automotive quality certification requirements.

Engineers and industry leaders can now further reduce traffic fatalities through active safety and ADAS technology. With high-performance SoCs built on a flexible DSP architecture, dedicated development tools and software libraries, and innovative algorithms, automotive suppliers and OEMs can bring stable, reliable ADAS applications to market.

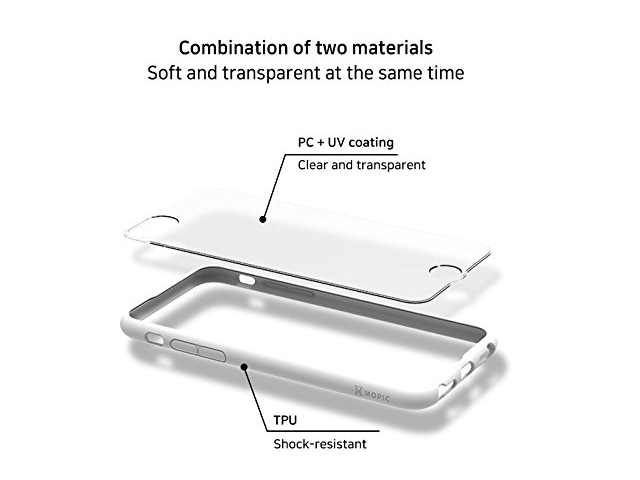

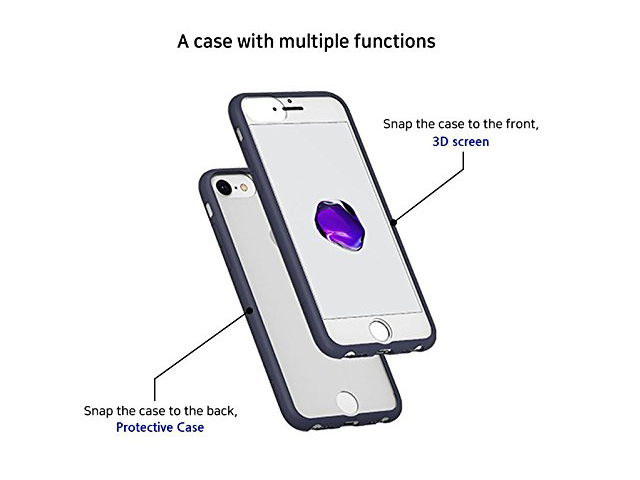

We are a professional manufacturer of snap 3D cases for Iphone. Our company are located in Korea.

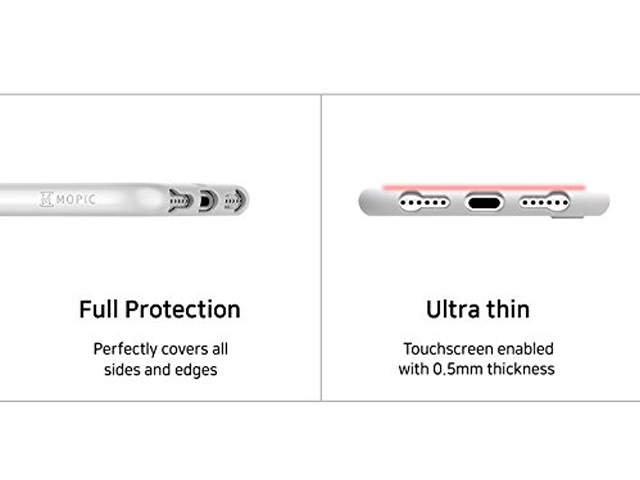

Iphone snap 3D case is a magic phone case, but you can use it to watch 3D movies, games, vedios wherever you go. The traditional VR headset is too hearvy, but this phone case is light weight, you can throw away the traditional VR glasses for smatphones right now, and try our iphone 3D viewers, your will drop in it!

For more information about how it works, please read the following descriptions:

HOW TO USE THE SNAP3D AS A 3D SCREEN

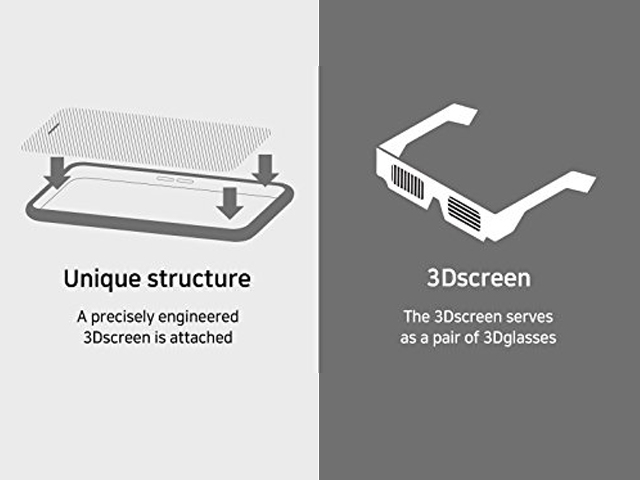

The attached 3D screen on the Snap3D acts like 3D glasses.

To turn your smartphone into a 3D device, you need to first download the [Mplayer3D" application.

Then install the Snap3D on your smartphone screen and run Mplayer3D.

Enjoy the stunning stereoscopic 3D!

About Mplayer3D

An application that transforms your smartphone into a 3D TV.

Can be download for free.

Supports video streaming on YouTube

A video player which DOES NOT support contents.

Provides demo videos only in the download section.

Supported format: MP4

DOES NOT support 2D to 3D conversion.

Iphone 8 Snap3D,Snap3D For Iphone 8,Iphone 8 Snap3D Case,Iphone 8 Snap 3D Viewer

iSID Korea Co., Ltd , https://www.isidsnap3d.com